Bypassing Content-Disposition: attachment

# Intro

Recently, I played SAS CTF with the Bushwhackers team. It was an extremely high-quality event with many hard and interesting tasks. One of the web tasks was called GigaUpload. It was a client-side challenge with several steps, but here we will focus on gaining XSS via CRLF injection after the `Content-Disposition: attachment` header. You can read the complete (and really good!) writeup here if you want.

Unfortunately, we didn’t manage to solve the task in time… But I really wanted to understand every part of the solution, so I did a little research of my own.

# Problem statement

- There is a web server.
- You can inject anything into the server’s HTTP response after the `Content-Disposition: attachment` header:

  ```http
  HTTP/1.0 200 OK
  Server: BaseHTTP/0.6 Python/3.12.9
  Date: Mon, 26 May 2025 23:41:28 GMT
  Content-Disposition: attachment
  X-File-Name: <injection point here>
  Other-Header: value
  ```

- The goal is to steal the URL that the bot will visit at some point in the future. This would be possible if we gained XSS and installed a service worker, or used other interesting HTTP response headers.

# Possible solutions

Right now I am aware of three ways you can solve this:

- The `NEL` header
- Service worker installation via the `Link` header
- A second `Content-Disposition` header in some configurations

# 1. The NEL header

The author’s solution involved this technique. NEL stands for Network Error Logging. It is an experimental technology that can be used to report details about failed and successful requests to an origin and, optionally, its subdomains. Those details include the URL and referrer of the request. The `Report-To` header must also be set to define the reporting endpoints. Also, these features currently work only in chromium-based browsers.

# 2. Service worker installation via the Link header

Injection of a single header is enough to achieve XSS using the Payment Request API. All the details of this technique were described in this excellent blog post by Slonser, so I won’t go into detail here.

#

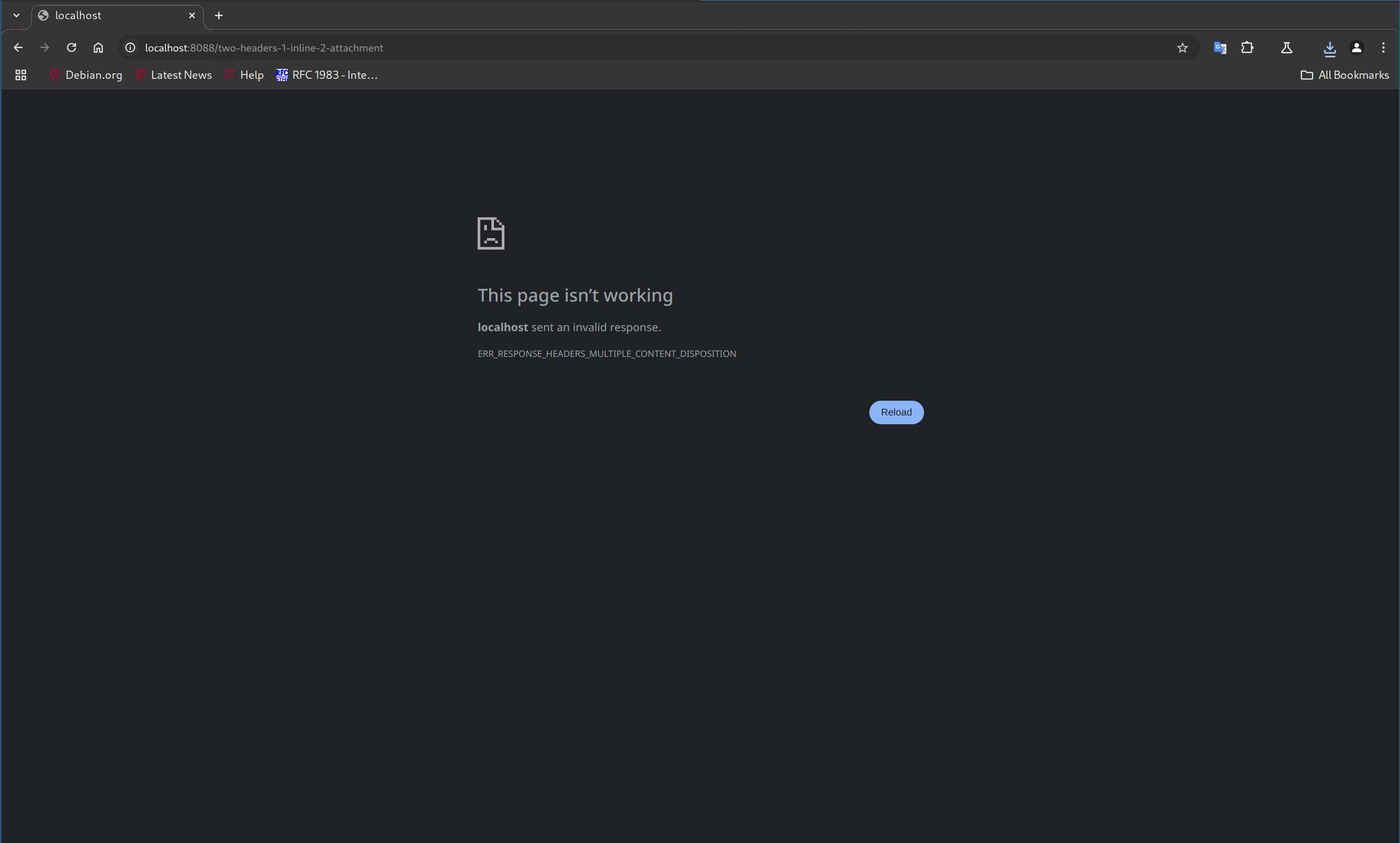

3. A second Content-Disposition header

After getting frustrated by not solving the task, we became even more frustrated

after reading the writeup. It described the exact same technique we tried

several times and failed! If you inject a second Content-Disposition header

with a differing value to the HTTP response, chromium normally won’t load the

page and show this error:

The error code here is `ERR_RESPONSE_HEADERS_MULTIPLE_CONTENT_DISPOSITION`, which plainly indicates that chromium does not accept multiple `Content-Disposition` headers. This is a security feature implemented in this chromium issue, and it is the behavior we got while performing local tests.

However, after reading the writeup I tried to reproduce this issue on the still-not-terminated task instance… Of course it worked. The page was loaded and rendered as inline HTML. But what was the difference between the local and remote setup? Can you spot it?

Remote

```http
HTTP/2 200 OK
Server: ycalb
Date: Mon, 26 May 2025 23:28:14 GMT
Content-Type: text/html
Content-Disposition: attachment
X-File-Name:
Content-Disposition: inline
A: B
X-File-Encoding: utf7
X-File-Content-Type: image/png
X-File-Size: 9
Access-Control-Allow-Origin: https://gigaupload.task.sasc.tf

<script>console.log(111)</script>
```

Local

```http
HTTP/1.0 200 OK
Server: BaseHTTP/0.6 Python/3.12.9
Date: Mon, 26 May 2025 23:15:16 GMT
Content-Disposition: attachment
X-File-Name:
Content-Type: text/html
Content-Disposition: inline
A: B
X-File-Encoding: utf7
X-File-Content-Type: image/png
X-File-Size: 9

<script>console.log(111)</script>
```

Apart from `Date`, `Server`, and other irrelevant headers, the only difference is the HTTP version: HTTP/2 on the remote instance versus HTTP/1.0 locally. To check whether this influenced browser behavior, I hacked together a Go server that serves different combinations of `Content-Disposition` headers over different HTTP versions.

POC server code. To run the server, you will need to generate certificates first:

```shell
mkdir -p certs
openssl req -x509 -newkey rsa:4096 -keyout certs/server.key -out certs/server.crt -days 365 -nodes -subj "/CN=localhost"
```

```go
package main

import (
	"crypto/tls"
	"fmt"
	"log"
	"net/http"
	"path/filepath"
)

func main() {
	mux := http.NewServeMux()

	// A single Content-Disposition header with a malformed value.
	mux.HandleFunc("/invalid-header", func(w http.ResponseWriter, r *http.Request) {
		w.Header().Add("Content-Disposition", "attachment,hui")
		fmt.Fprintf(w, "Hello from http server!\nProtocol: %s\n", r.Proto)
	})

	// Two Content-Disposition headers: inline first, attachment second.
	mux.HandleFunc("/two-headers-1-inline-2-attachment", func(w http.ResponseWriter, r *http.Request) {
		w.Header().Add("Content-Disposition", "inline")
		w.Header().Add("Content-Disposition", "attachment")
		fmt.Fprintf(w, "Hello from http server!\nProtocol: %s\n", r.Proto)
	})

	// Two Content-Disposition headers: attachment first, inline second.
	mux.HandleFunc("/two-headers-1-attachment-2-inline", func(w http.ResponseWriter, r *http.Request) {
		w.Header().Add("Content-Disposition", "attachment")
		w.Header().Add("Content-Disposition", "inline")
		fmt.Fprintf(w, "Hello from http server!\nProtocol: %s\n", r.Proto)
	})

	// Control cases with a single valid header.
	mux.HandleFunc("/one-header-inline", func(w http.ResponseWriter, r *http.Request) {
		w.Header().Add("Content-Disposition", "inline")
		fmt.Fprintf(w, "Hello from http server!\nProtocol: %s\n", r.Proto)
	})

	mux.HandleFunc("/one-header-attachment", func(w http.ResponseWriter, r *http.Request) {
		w.Header().Add("Content-Disposition", "attachment")
		fmt.Fprintf(w, "Hello from http server!\nProtocol: %s\n", r.Proto)
	})

	// Index page linking to every test case.
	mux.HandleFunc("/", func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprint(w, `
<html>
<body>
<a href="/invalid-header">invalid-header</a><br>
<a href="/two-headers-1-inline-2-attachment">two-headers-1-inline-2-attachment</a><br>
<a href="/two-headers-1-attachment-2-inline">two-headers-1-attachment-2-inline</a><br>
<a href="/one-header-inline">one-header-inline</a><br>
<a href="/one-header-attachment">one-header-attachment</a><br>
</body>
</html>
`)
	})

	certFile := filepath.Join("certs", "server.crt")
	keyFile := filepath.Join("certs", "server.key")

	tlsConfig := &tls.Config{
		MinVersion: tls.VersionTLS12,
		CurvePreferences: []tls.CurveID{
			tls.CurveP521,
			tls.CurveP384,
			tls.CurveP256,
		},
		// Deprecated and ignored by recent Go versions; kept from the original setup.
		PreferServerCipherSuites: true,
		CipherSuites: []uint16{
			tls.TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,
			// HTTP/2 over TLS 1.2 requires support for this suite (RFC 7540, section 9.2.2).
			tls.TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,
		},
	}

	// Go's server enables HTTP/2 over TLS (negotiated via ALPN) by default.
	http2server := &http.Server{
		Addr:      ":8443",
		Handler:   mux,
		TLSConfig: tlsConfig,
	}

	// Plain-text listener: browsers only speak HTTP/1.1 here (no h2c).
	http1server := &http.Server{
		Addr:    ":8088",
		Handler: mux,
	}

	go func() {
		log.Printf("Starting HTTP/2 server on :8443")
		log.Fatal(http2server.ListenAndServeTLS(certFile, keyFile))
	}()

	log.Printf("Starting HTTP/1.1 server on :8088")
	log.Fatal(http1server.ListenAndServe())
}
```

Experiments showed that over HTTP/1.1 chromium only loads pages with a single valid `Content-Disposition` header, while over HTTP/2 it loads every page with multiple (or malformed) `Content-Disposition` headers inline.

Experiment results for every path and protocol:

| Protocol | Path | Result |
|---|---|---|
| HTTP/1.1 | /invalid-header | ❌ Does not load |
| HTTP/1.1 | /two-headers-1-inline-2-attachment | ❌ Does not load |
| HTTP/1.1 | /two-headers-1-attachment-2-inline | ❌ Does not load |
| HTTP/1.1 | /one-header-attachment | ✅ Loads (as attachment) |
| HTTP/1.1 | /one-header-inline | ✅ Loads (inline) |
| HTTP/2 | /invalid-header | ✅ Loads (inline) |
| HTTP/2 | /two-headers-1-inline-2-attachment | ✅ Loads (inline) |
| HTTP/2 | /two-headers-1-attachment-2-inline | ✅ Loads (inline) |
| HTTP/2 | /one-header-attachment | ✅ Loads (as attachment) |
| HTTP/2 | /one-header-inline | ✅ Loads (inline) |
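To rule out the server itself, a quick check with Go’s own HTTP client shows that both `Content-Disposition` values reach the client over HTTP/1.1 and over HTTP/2, so the difference in the table comes from how chromium treats them. This sketch uses `net/http/httptest` (with its `EnableHTTP2` switch) instead of the fixed ports above, and `twoDispositions` is a hypothetical stand-in for the `/two-headers-1-attachment-2-inline` route.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
)

// twoDispositions mimics the /two-headers-1-attachment-2-inline handler.
func twoDispositions(w http.ResponseWriter, r *http.Request) {
	w.Header().Add("Content-Disposition", "attachment")
	w.Header().Add("Content-Disposition", "inline")
	fmt.Fprintf(w, "Protocol: %s\n", r.Proto)
}

// fetch starts a TLS test server (HTTP/2 if h2 is true, otherwise HTTP/1.1)
// and returns the negotiated protocol and the Content-Disposition values seen.
func fetch(h2 bool) (string, []string, error) {
	ts := httptest.NewUnstartedServer(http.HandlerFunc(twoDispositions))
	ts.EnableHTTP2 = h2
	ts.StartTLS()
	defer ts.Close()

	// ts.Client() trusts the test certificate and matches the HTTP/2 setting.
	resp, err := ts.Client().Get(ts.URL)
	if err != nil {
		return "", nil, err
	}
	defer resp.Body.Close()
	if _, err := io.Copy(io.Discard, resp.Body); err != nil {
		return "", nil, err
	}
	return resp.Proto, resp.Header.Values("Content-Disposition"), nil
}

func main() {
	for _, h2 := range []bool{false, true} {
		proto, values, err := fetch(h2)
		if err != nil {
			panic(err)
		}
		fmt.Println(proto, values)
	}
}
```

Unlike chromium, Go’s client does not reject the duplicates on either protocol; it simply exposes both values.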

Is this the intended behavior? It seems like the only thing that can answer our question is the chromium source code.

# Down the chromium hole

OK, here is what I’ve found after several hours of browsing the chromium sources:

- HTTP requests in chromium are handled in this loop. It dispatches all stages of HTTP transaction handling from connection establishment to response parsing.

- We are interested in the code responsible for reading headers, which in this case is the `DoReadHeaders` function.

- `DoReadHeaders` calls `ReadResponseHeaders`, whose implementation is defined in the stream classes (see "Overridden By" for the `ReadResponseHeaders` function).

- `http_basic_stream` calls `HttpStreamParser::ReadResponseHeaders`, which checks for duplicated sensitive headers. In contrast, `spdy_http_stream` (SPDY is the name of the protocol superseded by HTTP/2; some HTTP/2-related logic is still handled under this name) parses headers in the `OnHeadersReceived` callback and does not check for duplicate `Content-Disposition` headers.

- `quic_http_stream` (HTTP/3) uses the same parser implementation as `spdy_http_stream`.

To sum up, even though the HTTP/2 parsing implementation checks for duplicate `Location` headers, the duplicate `Content-Disposition` check was left out. So when determining the disposition type of an HTTP/2 response, chromium uses the normalized header value: all values of this header joined with a comma (for example, `attachment, inline`). This is an invalid value per the spec, so the browser falls back to inline rendering.
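The fallback can be sketched as follows. This is a simplified model under stated assumptions, not chromium’s parser: values are joined with `, ` as described above, the disposition type is the part before the first `;`, a type that is not a valid RFC 7230 token (commas and spaces are not allowed) leaves the default `inline` in place, and unknown but well-formed types are treated as `attachment`, as RFC 6266 recommends. Parameters such as `filename` are ignored, and `normalize`, `isToken` and `dispositionType` are hypothetical names.

```go
package main

import (
	"fmt"
	"strings"
)

// normalize joins repeated header values with ", ", as the text describes
// chromium doing for HTTP/2 responses.
func normalize(values []string) string {
	return strings.Join(values, ", ")
}

// isToken reports whether s is a non-empty RFC 7230 token.
func isToken(s string) bool {
	if s == "" {
		return false
	}
	for _, c := range s {
		switch {
		case c >= 'a' && c <= 'z', c >= 'A' && c <= 'Z', c >= '0' && c <= '9':
		case strings.ContainsRune("!#$%&'*+-.^_`|~", c):
		default:
			return false
		}
	}
	return true
}

// dispositionType returns "inline" or "attachment" for a header value.
// Assumption: a malformed type keeps the default (inline); unknown but
// well-formed types are handled like attachment (RFC 6266, section 4.2).
func dispositionType(value string) string {
	typ, _, _ := strings.Cut(value, ";")
	typ = strings.Trim(typ, " \t")
	switch {
	case !isToken(typ):
		return "inline"
	case strings.EqualFold(typ, "inline"):
		return "inline"
	default:
		// "attachment" and any unknown token
		return "attachment"
	}
}

func main() {
	for _, values := range [][]string{
		{"attachment"},
		{"attachment", "inline"},
		{"inline", "attachment"},
	} {
		v := normalize(values)
		fmt.Printf("%-22q -> %s\n", v, dispositionType(v))
	}
}
```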

# Should I have reported this?

Well,

- Arbitrary header injection already allows persistent XSS via `Link` header injection (the second technique from the possible solutions), so fixing this won’t solve anything.

- While browsing the chromium issue tracker, I came across this bug: link header injection possible in HTTP/2. Even though this bug was awarded a small bounty, the chromium team stated that issues relying on vulnerable servers are out of scope.

So this does not look like something that would be triaged as a severe security problem.

# Afterword

All in all, this was a really interesting bit of research. I am very surprised that nobody seems to have encountered and reported this issue before, as this should be a pretty common scenario when exploiting CRLF injections. Maybe I’m wrong here and just didn’t manage to find the relevant materials? Anyway, it’s sad that we did not manage to come up with these bypasses during the CTF. Next time we should probably be extra careful about the testing environment, be smarter, and make fewer mistakes. And play more SAS CTF, as it is really good and the Drovosec team did a great job.